Other monitoring and logging solutions

BigAnimal provides a Prometheus-compatible endpoint that you can connect to your own monitoring infrastructure. It also makes Postgres logs available through blob storage.

To enable these features on your clusters, contact BigAnimal Support.

Metrics

You can access metrics in Prometheus format once BigAnimal Support enables the feature at your request. To find the hostname and port for a cluster, use the Prometheus URL shown on the Monitoring and logging tab of the cluster's detail page in the BigAnimal portal.

We have provided some example metrics to help get you started.

Patterns for accessing metrics

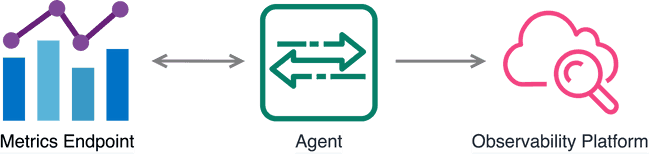

A common pattern for metric shipping is to have the vendor-supplied agent scrape the metrics endpoint and send the metrics to the desired platform.
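If you want to check the endpoint before wiring up a vendor agent, a small script can scrape it and parse the Prometheus text format. The following is a minimal sketch, not an official client. It assumes the endpoint is reachable without extra authentication headers, and the URL you pass to `scrape` is the Prometheus URL copied from the portal. The function names and the metric names in the usage note are hypothetical.

```python
import re
import urllib.request

# One sample line: metric name, optional {labels}, then the value.
# A trailing timestamp, if present, is ignored.
_SAMPLE = re.compile(r'^([A-Za-z_:][A-Za-z0-9_:]*)(\{.*\})?\s+(\S+)')


def parse_metrics(text):
    """Return a list of (name, labels_string, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith("#"):
            continue
        match = _SAMPLE.match(line)
        if match is None:
            continue
        name, labels, raw = match.groups()
        try:
            value = float(raw)  # also accepts NaN, +Inf, and -Inf
        except ValueError:
            continue
        samples.append((name, labels or "", value))
    return samples


def scrape(url, timeout=10):
    """Fetch the endpoint at `url` and parse the response body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_metrics(resp.read().decode("utf-8"))
```

For example, `parse_metrics('example_up 1\nexample_conn{db="app"} 3')` returns two tuples, one per sample line. In production, a vendor agent or a Prometheus server normally does this work for you.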

Here are links for more information on some common monitoring services:

Self-managed Grafana (not Grafana Cloud): Grafana Prometheus datasource

Logs

You can view your logs in your cloud provider's blob storage solution once BigAnimal Support enables the feature at your request. The location of your object storage is shown on the Monitoring and logging tab of your cluster's detail page in the BigAnimal portal.

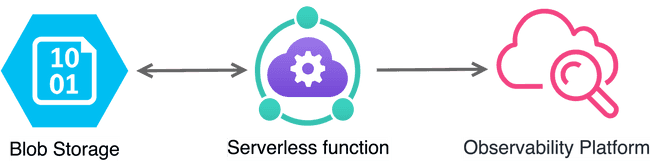

The general pattern for getting logs from blob storage into the cloud provider's solution is to write a custom serverless function that watches the blob storage and uploads new log data to the desired solution.

Watching for logs on AWS

You can use Python code to read from the S3 bucket and then use the AWS APIs to upload the logs to your monitoring solution. For an example that targets CloudWatch, see aws-load-balancer-logs-to-cloudwatch.
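As a rough illustration of this pattern, the sketch below shows an AWS Lambda handler triggered by S3 object-created notifications. It is a starting point under stated assumptions, not a tested integration. It assumes the log objects are UTF-8 text and that objects with a `.gz` suffix are gzip-compressed, and `forward_line` is a hypothetical placeholder for the call to your monitoring provider.

```python
import gzip
import urllib.parse


def s3_objects(event):
    """Yield (bucket, key) for each record in an S3 event notification."""
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys are URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(s3["object"]["key"])
        yield s3["bucket"]["name"], key


def decode_log(body, key):
    """Return non-empty log lines; gunzip first for .gz keys (assumed)."""
    if key.endswith(".gz"):
        body = gzip.decompress(body)
    text = body.decode("utf-8", errors="replace")
    return [line for line in text.splitlines() if line]


def forward_line(line):
    """Hypothetical placeholder: replace with your provider's API call."""
    print(line)


def handler(event, context):
    # Imported here so the helpers above can be tested without boto3.
    import boto3

    s3 = boto3.client("s3")
    for bucket, key in s3_objects(event):
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
        for line in decode_log(body, key):
            forward_line(line)
```

Keeping the parsing helpers separate from the `boto3` calls makes it possible to unit test them locally with a sample event before deploying the function.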

Watching for logs on Azure

You can use Azure Functions to read the object from Azure Blob Storage and then use your monitoring solution's API to upload the data.
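The sketch below outlines what the body of such a function might look like in Python. It assumes a blob trigger binding named `myblob` is configured separately for the function and that the runtime passes a stream-like object with a `read()` method. It also assumes the logs are UTF-8 text. `forward_line` is a hypothetical placeholder for the upload call.

```python
import logging


def blob_to_lines(blob):
    """Read a blob-like object (anything with .read()) into log lines."""
    text = blob.read().decode("utf-8", errors="replace")
    # Drop lines that are empty or contain only whitespace.
    return [line for line in text.splitlines() if line.strip()]


def forward_line(line):
    """Hypothetical placeholder: replace with your provider's API call."""
    logging.info(line)


def main(myblob):
    # `myblob` is the stream supplied by the blob trigger binding (assumed
    # to be named "myblob" in the function's binding configuration).
    for line in blob_to_lines(myblob):
        forward_line(line)
```

Because `blob_to_lines` needs only a `read()` method, you can exercise it locally with any in-memory byte stream.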

Uploading logs to common third-party providers

Once your function has observed new log data, you can use your monitoring provider's API to push log data to their platform.

Some platform providers have limitations regarding the ingestion of logs. Read the vendor documentation carefully.
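One way to stay within such limits is to group log lines into batches before each API call. The helper below is a minimal sketch. The default `max_bytes` and `max_count` values are illustrative placeholders, not any vendor's actual limits, so take the real values from your provider's documentation.

```python
def batch_lines(lines, max_bytes=1_000_000, max_count=1000):
    """Yield lists of lines within max_count items and max_bytes in total.

    A single line larger than max_bytes is yielded alone in its own batch;
    the caller decides whether to truncate or drop it.
    """
    batch, size = [], 0
    for line in lines:
        n = len(line.encode("utf-8"))
        # Close the current batch if adding this line would exceed a limit.
        if batch and (size + n > max_bytes or len(batch) >= max_count):
            yield batch
            batch, size = [], 0
        batch.append(line)
        size += n
    if batch:
        yield batch
```

Each yielded batch can then be sent in one request, which also reduces the number of API calls your function makes.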

Datadog

Dynatrace

AWS: S3 log forwarder

Azure: Azure log forwarder

Warning

At the time of writing, the Dynatrace integration works only if you can stream the logs from Azure Storage to Azure Event Hubs.

New Relic

CLI command

You can also get the metrics and logs URLs from the show-cluster-monitoring-urls CLI command. See Logging and metrics CLI command for more information.
